The question that I get the most from new and experienced machine learning engineers is “how can I get higher accuracy?”

This cheat sheet collects tips and pitfalls to keep at the top of your list when training a deep learning model, including convolutional and recurrent neural networks. One idea that applies almost everywhere is transfer learning. Training a deep learning model requires a lot of data and, more importantly, a lot of time. It is often useful to start from weights pre-trained on huge datasets, which took days or weeks to train, and adapt them to your use case. The best way to do this depends on how much data you have at hand.

That makes a lot of sense, since the most valuable part of machine learning for business is often its predictive capabilities. Improving the accuracy of prediction is an easy way to squeeze more value from existing systems.

The guide will be broken up into four different sections with some strategies in each.

- Data Optimization

- Algorithm tuning

- Hyper-Parameter Optimization

- Ensembles, Ensembles, Ensembles

Not all of these ideas will boost model performance, and you will see limited returns the more of them you apply to the same problem.

Still stuck after trying a few of these? That indicates you should rethink the core solution to your business problem. This article is just a deep learning performance cheat sheet, so I’m linking you to more detailed sources of information in each section.

Data Optimization

Balance your data set

If your problem is classification, one of the easiest ways to increase the performance of an underperforming deep learning model is to balance your dataset. Real-world datasets are often skewed, and if you want the best accuracy you want your deep learning system to learn how to pick between classes based on their characteristics, not by copying the class distribution.

Common methods include:

- Subsample Majority Class: You can balance the class distributions by subsampling the majority class.

- Oversample Minority Class: Sampling with replacement can be used to increase your minority class proportion.
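Both methods above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production resampler: the function names, the toy `rows` and `labels`, and the choice to balance every class to the same size are assumptions made for the example.

```python
import random
from collections import Counter


def group_by_class(rows, labels):
    """Map each label to the list of rows carrying it."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(label, []).append(row)
    return groups


def oversample_minority(rows, labels, seed=0):
    """Grow every class to the size of the largest one by sampling with replacement."""
    rng = random.Random(seed)
    groups = group_by_class(rows, labels)
    target = max(len(members) for members in groups.values())
    out_rows, out_labels = [], []
    for label, members in groups.items():
        # Keep all original rows, then add random repeats until the class is full.
        extra = [rng.choice(members) for _ in range(target - len(members))]
        out_rows.extend(members + extra)
        out_labels.extend([label] * target)
    return out_rows, out_labels


def subsample_majority(rows, labels, seed=0):
    """Shrink every class to the size of the smallest one (sampling without replacement)."""
    rng = random.Random(seed)
    groups = group_by_class(rows, labels)
    target = min(len(members) for members in groups.values())
    out_rows, out_labels = [], []
    for label, members in groups.items():
        out_rows.extend(rng.sample(members, target))
        out_labels.extend([label] * target)
    return out_rows, out_labels


# Made-up, skewed toy data: 8 examples of class 0 and 2 of class 1.
rows = [[float(i)] for i in range(10)]
labels = [0] * 8 + [1] * 2

over_rows, over_labels = oversample_minority(rows, labels)
under_rows, under_labels = subsample_majority(rows, labels)
print(Counter(over_labels), Counter(under_labels))
```

Resample only the training split; the validation and test sets should keep the real-world class distribution so that your metrics stay honest.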

More Data

Many of us are familiar with this graph. It shows the relationship between the amount of data and performance for both deep learning and classical machine learning approaches. If you are not, then the lesson is clear and straightforward. If you want better performance for your model, you need more data. Depending on your budget you might opt for creating more labeled data or collecting more unlabeled data and training your feature extraction sub-model more.

Open Source Labeling Software

Generate More Data

Or fake it till you make it. An often ignored method of improving accuracy is creating new data from what you already have. Take photos, for example: engineers will often create more images by rotating and randomly shifting existing images. Such transformations also reduce overfitting to the training set.
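As a rough sketch of the idea, the snippet below shifts and rotates a tiny image stored as a list of rows. The helper names, the 3x3 toy image, and the restriction to quarter-turn rotations are assumptions chosen to keep the example dependency-free; real pipelines usually rely on an image library.

```python
import random


def shift_right(image, pixels, fill=0):
    """Shift every row right by `pixels`, padding the left edge with `fill`."""
    width = len(image[0])
    pixels = min(pixels, width)
    return [[fill] * pixels + row[: width - pixels] for row in image]


def rotate_90_clockwise(image):
    """Rotate a 2D grid a quarter turn clockwise."""
    return [list(column) for column in zip(*image[::-1])]


def augment(image, copies, max_shift=1, seed=0):
    """Create `copies` new images using a random shift and a random number of quarter turns."""
    rng = random.Random(seed)
    augmented = []
    for _ in range(copies):
        new_image = shift_right(image, rng.randint(0, max_shift))
        for _ in range(rng.randint(0, 3)):
            new_image = rotate_90_clockwise(new_image)
        augmented.append(new_image)
    return augmented


# Made-up 3x3 "image"; each number stands for a pixel intensity.
image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
augmented = augment(image, copies=4)
```

Each augmented copy keeps the label of the image it came from, so this only makes sense for transformations that do not change the correct answer.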

Algorithm Tuning

Copy The Researchers

Are you working on a problem that has lots of research behind it? You are in luck, because hundreds of engineers might have already put a bunch of thought into how to get the best accuracy for this problem. Read some research papers on the topic and take note of the different methods they used to get results! They might even have a GitHub repository of their code for you to sink your teeth into.

Google Scholar is an excellent place to start your search. They offer many tools to help you find related research as well.

For storage and organization of research papers, I use Mendeley.

Algorithm spot check

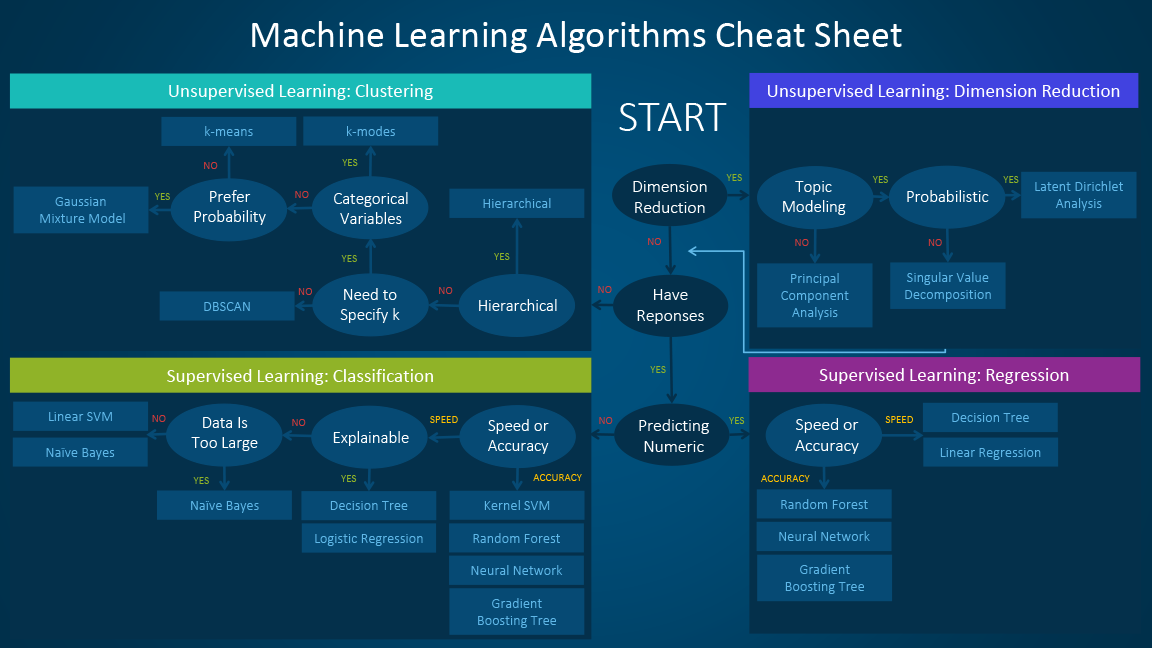

Don’t let your ego get the best of you. It’s impossible to know in advance which machine learning algorithm will work best for your problem. Whenever I attack a new problem without much research behind it, I look at a few of the available methods and try all of them, both deep learning (CNNs, RNNs, etc.) and classical machine learning approaches (Random Forests, Gradient Boosting, etc.).

Rank the results of all your experiments and double down on the algorithms that perform the best.

Hyper-Parameter Optimization

Learning rates

The Adam optimization algorithm is tried and true, and it often gives strong results on deep learning problems. Even with its strong performance, it can still leave you stuck in a local minimum for your problem. An alternative that helps reduce the chance of getting stuck in a local minimum is Stochastic Gradient Descent with Warm Restarts (SGDR), which lowers the learning rate along a cosine curve and periodically resets it to a high value.
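To make the warm-restart idea concrete, here is a minimal sketch of the cosine schedule with restarts used by SGDR. The function name and the default values for `lr_max`, `lr_min`, `cycle_length`, and `cycle_mult` are illustrative assumptions, not recommended settings.

```python
import math


def sgdr_learning_rate(epoch, lr_max=0.1, lr_min=0.001, cycle_length=10, cycle_mult=2):
    """Cosine-annealed learning rate that jumps back to lr_max at the start of each cycle."""
    position, length = epoch, cycle_length
    # Walk forward through the cycles until we reach the one containing `epoch`.
    while position >= length:
        position -= length
        length *= cycle_mult  # each new cycle is `cycle_mult` times longer
    cosine = math.cos(math.pi * position / length)
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + cosine)


# With these defaults the restarts happen at epochs 0, 10, and 30.
schedule = [sgdr_learning_rate(epoch) for epoch in range(31)]
```

In a training loop you would call this once per epoch (or per batch, with fractional epochs) and pass the result to your optimizer. Most deep learning frameworks ship their own version of this schedule, so check your framework's documentation before rolling your own.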

Batch size and number of epochs

A standard procedure is using large batch sizes with a large number of epochs for modern deep learning implementations, but common strategies yield common results. Experiment with the size of your batches and the number of training epochs.

Early Stopping

This is an excellent method for reducing the generalization error of your deep learning system. Continual training might improve accuracy on your data set, but at a certain point, it starts to reduce the model’s accuracy on data not yet seen by the model. To improve real-world performance try early stopping.
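The logic behind early stopping fits in a few lines. The sketch below replays a made-up list of validation losses and reports where training would stop; the function name, `patience`, and `min_delta` are assumptions for illustration, and a real loop would also restore the weights saved at the best epoch.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Validation loss improved: remember this epoch as the best so far.
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs in a row: stop training here.
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Made-up losses: validation loss bottoms out at epoch 3, then starts rising.
val_losses = [0.90, 0.70, 0.60, 0.58, 0.61, 0.63, 0.66, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
```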

Network Architecture

If you want to try something a little more interesting, you can give Efficient Neural Architecture Search (ENAS) a try. This algorithm searches for a custom network design that aims to maximize accuracy on your dataset, and it is far more efficient than the standard neural architecture search that cloud ML uses.

Regularization

A robust method to reduce overfitting is to use regularization. There are a couple of different ways to use regularization that you can try on your deep learning project. If you haven’t tried these methods yet, I would start including them in every project you do.

- Dropout: Randomly turns off a percentage of neurons during training. Dropout helps prevent groups of neurons from overfitting together.

- Weight penalty L1 and L2: Weights that explode in size can be a real problem in deep learning and reduce accuracy. One way to combat this is to add decay to all weights. The penalty tries to keep all of the weights in the network as small as possible unless there are large gradients to counteract it. On top of often increasing performance, it has the benefit of making the model easier to interpret.
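As a small illustration of the weight penalty, the sketch below applies one gradient step with optional L1 and L2 terms. The function name, the penalty strengths, and the toy weights are assumptions made for the example; frameworks usually expose this as a regularizer or a weight decay option.

```python
def sgd_step(weights, grads, lr=0.1, l1=0.0, l2=0.0):
    """One gradient step on: data loss + l1 * sum(|w|) + 0.5 * l2 * sum(w ** 2)."""
    updated = []
    for w, g in zip(weights, grads):
        sign = (w > 0) - (w < 0)
        # The L1 term pulls each weight toward zero by a constant amount,
        # the L2 term pulls it toward zero in proportion to its size.
        penalty_grad = l1 * sign + l2 * w
        updated.append(w - lr * (g + penalty_grad))
    return updated


weights = [4.0, -2.0, 0.5]
zero_grads = [0.0, 0.0, 0.0]  # pretend the data loss is flat at this point

# With no gradient from the data, every weight shrinks by 5% per step.
decayed = sgd_step(weights, zero_grads, lr=0.1, l2=0.5)
```

Setting `zero_grads` to zero isolates the penalty: the weights only stay large when the data gradient keeps pushing back against the decay.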

Ensembles, Ensembles, Ensembles

Having trouble picking the best model to use? Often you can combine the outputs from the different models and get better accuracy. There are two steps for every one of these algorithms.

- Producing a distribution of simple ML models on subsets of the original data

- Combining the distribution into one “Aggregated” model

Combined Models/Views (Bagging)

In this method, you train a few models that differ in some way (classically, each one is fit on a different bootstrap sample of the training data) and you average their outputs to create the final output. Bagging has the effect of reducing variance in the model. You can intuitively think of it as having multiple people with different backgrounds thinking about the same problem from different starting positions. Just as on a team, this can be a potent tool for getting the right answer.
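A minimal sketch of bagging, assuming a deliberately simple base model (a straight line fit by least squares) and made-up noisy data. The function names, the number of models, and the data are all illustrative assumptions; the point is only the resample, fit, and average pattern.

```python
import random
from statistics import mean


def fit_line(xs, ys):
    """Least-squares slope and intercept for 1D data."""
    x_bar, y_bar = mean(xs), mean(ys)
    spread = sum((x - x_bar) ** 2 for x in xs)
    if spread == 0:
        return 0.0, y_bar  # degenerate sample: fall back to predicting the mean
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / spread
    return slope, y_bar - slope * x_bar


def bagged_fit(xs, ys, n_models=25, seed=0):
    """Fit one line per bootstrap sample (same size as the data, drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        picks = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in picks], [ys[i] for i in picks]))
    return models


def bagged_predict(models, x):
    """Average the predictions of all models."""
    return mean(slope * x + intercept for slope, intercept in models)


# Made-up data: y = 2x + 1 plus uniform noise.
noise = random.Random(1)
xs = list(range(20))
ys = [2 * x + 1 + noise.uniform(-1, 1) for x in xs]

models = bagged_fit(xs, ys)
prediction = bagged_predict(models, 10)
```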

Stacking

Stacking is similar to bagging. The difference is that you don’t have a fixed formula (such as an average) for your combined output. Instead, you create a meta-level learner that, based on the input data, chooses how to weigh the answers from your different models to produce the final output.
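Here is a simplified sketch of a meta-level learner. It assumes the base models already exist and that you have their predictions on held-out data; the meta-learner is a plain linear blend fit by gradient descent. The function names and numbers are made up, and learning one fixed weight per model is a simplification, since a fuller meta-learner could make the weights depend on the input.

```python
def blend(weights, preds):
    """Weighted sum of the base models' predictions for one example."""
    return sum(w * p for w, p in zip(weights, preds))


def mse(weights, base_preds, targets):
    """Mean squared error of the blended predictions."""
    return sum((blend(weights, p) - y) ** 2 for p, y in zip(base_preds, targets)) / len(targets)


def fit_meta_weights(base_preds, targets, lr=0.01, steps=2000):
    """Learn one blending weight per base model by gradient descent on the MSE."""
    n_models = len(base_preds[0])
    weights = [1.0 / n_models] * n_models  # start from a plain average
    n = len(targets)
    for _ in range(steps):
        grads = [0.0] * n_models
        for preds, y in zip(base_preds, targets):
            error = blend(weights, preds) - y
            for j, p in enumerate(preds):
                grads[j] += 2 * error * p / n
        weights = [w - lr * g for w, g in zip(weights, grads)]
    return weights


# Made-up held-out predictions: model A (first column) is accurate, model B is noisy.
targets = [1.0, 2.0, 3.0, 4.0]
base_preds = [[1.1, 0.0], [1.9, 3.0], [3.2, 2.0], [3.9, 5.5]]

meta_weights = fit_meta_weights(base_preds, targets)
average_error = mse([0.5, 0.5], base_preds, targets)
stacked_error = mse(meta_weights, base_preds, targets)
```

Fit the meta-learner on predictions for data the base models did not train on; otherwise it simply learns to trust whichever model overfit the most.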

Still having issues?

Reframe Your Problem

Take a break from looking at your screen and get a coffee. This solution is all about rethinking your problem from the beginning. I find it helps to sit down and start brainstorming different ways that you could solve the problem. Maybe start by asking yourself some simple questions:

- Can my classification problem become a regression problem or the reverse?

- Can you break your problem down into smaller pieces?

- Are there any observations that you have collected about your data that could change the scope of the problem?

- Can your binary output become a softmax output or vice versa?

- Are you looking at this problem in the most efficient way?

Rethinking your problem can be the hardest of the methods to increase performance, but it is often the one that yields the best results. It helps to chat with someone who has experience in deep learning and can give you a fresh take on your problem.



Part 0: Intro

Why

Deep Learning is a powerful toolset, but it also involves a steep learning curve and a radical paradigm shift.

For those new to Deep Learning, there are many levers to learn and different approaches to try out. Even more frustratingly, designing deep learning architectures can be equal parts art and science, without some of the rigorous backing found in longer-studied linear models.

In this article, we’ll work through some of the basic principles of deep learning, by discussing the fundamental building blocks in this exciting field. Take a look at some of the primary ingredients of getting started below, and don’t forget to bookmark this page as your Deep Learning cheat sheet!

FAQ

What is a layer?

A layer is an atomic unit within a deep learning architecture. Networks are generally composed by adding successive layers.

What properties do all layers have?

Almost all layers will have:

- Weights (free parameters), which create a linear combination of the outputs from the previous layer.

- An activation, which allows for non-linearities

- A bias node, an equivalent to one incoming variable that is always set to 1
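The three properties above map directly onto a few lines of code. This is a bare-bones sketch of one layer's forward pass; the function names and the numbers are assumptions for illustration, and real frameworks do the same computation with matrix operations.

```python
def relu(z):
    """A common activation: pass positive values through, clamp negatives to zero."""
    return max(0.0, z)


def dense_forward(inputs, weights, biases, activation=relu):
    """Compute one output per unit: activation(weights . inputs + bias * 1)."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        # Linear combination of the previous layer's outputs, plus the bias
        # weight multiplied by the bias node's constant value of 1.
        z = sum(w * x for w, x in zip(unit_weights, inputs)) + bias * 1.0
        outputs.append(activation(z))
    return outputs


previous_outputs = [1.0, 2.0]
weights = [[0.5, -1.0],   # weights for unit 0
           [1.0, 1.0]]    # weights for unit 1
biases = [0.5, -1.0]

layer_outputs = dense_forward(previous_outputs, weights, biases)
```

Without the activation, stacking layers would collapse into a single linear combination, which is why the non-linearity matters.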

What changes between layer types?

There are many different layers for many different use cases. Different layers may allow for combining adjacent inputs (convolutional layers), or dealing with multiple timesteps in a single observation (RNN layers).

Difference between DL book and Keras Layers

Frustratingly, there is some inconsistency in how layers are referred to and utilized. For example, the Deep Learning Book commonly refers to architectures (whole networks), rather than specific layers. Its discussion of a convolutional neural network, for instance, focuses on the convolutional layer as a sub-component of the network.

1D vs 2D


Some layers have 1D and 2D varieties. A good rule of thumb is:

- 1D: Temporal (time series, text)

- 2D: Spatial (image)

Cheat sheet

Part 1: Standard layers

Input

- Simple pass through

- Needs to align w/ shape of upcoming layers


Embedding

- Categorical / text to vector

- Vector can be used with other (linear) algorithms

- Can use transfer learning / pre-trained embeddings (see example)
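At its core, an embedding is a lookup table from category (or word) to vector. The sketch below uses random vectors as a stand-in; the function names, the toy vocabulary, and the vector size are assumptions, and in practice the table is either learned during training or loaded from pre-trained embeddings.

```python
import random


def build_embedding(vocabulary, dim, seed=0):
    """Create a token -> row index map and a table with one small random vector per token."""
    rng = random.Random(seed)
    index = {token: i for i, token in enumerate(vocabulary)}
    table = [[rng.uniform(-0.05, 0.05) for _ in range(dim)] for _ in vocabulary]
    return index, table


def embed(tokens, index, table):
    """Replace each token with its vector from the table."""
    return [table[index[token]] for token in tokens]


vocabulary = ["the", "cat", "sat"]
index, table = build_embedding(vocabulary, dim=4)
vectors = embed(["the", "cat", "the"], index, table)
```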

Dense layers

- Vanilla, default layer

- Many different activations

- Probably want to use ReLU activation

Dropout

- Helpful for regularization

- Generally should not be used after input layer

- Can select fraction of weights (p) to be dropped

- Weights are scaled at train / test time, so average weight is the same for both

- Weights are not dropped at test time
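The scaling rule is easier to see in code. This sketch uses the common "inverted dropout" variant, which is an assumption on my part: values that survive are scaled up during training so that nothing needs to change at test time. The function name and the numbers are illustrative.

```python
import random


def dropout(activations, p, training, rng=None):
    """Inverted dropout: zero each value with probability p, during training only."""
    if not training or p == 0.0:
        return list(activations)  # nothing is dropped at test time
    rng = rng or random.Random()
    keep = 1.0 - p
    # Survivors are divided by the keep probability so the expected value
    # of each activation is the same at train and test time.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


activations = [1.0] * 10000
train_out = dropout(activations, p=0.5, training=True, rng=random.Random(0))
test_out = dropout(activations, p=0.5, training=False)

train_mean = sum(train_out) / len(train_out)
```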

Part 2: Specialized layers

Convolutional layers

- Take a subset of input

- Create a linear combination of the elements in that subset

- Replace subset (multiple values) with the linear combination (single value)

- Weights for linear combination are learned
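The steps above can be sketched for the 1D case as a sliding window. The function name, the signal, and the fixed kernel are assumptions for illustration; in a real convolutional layer the kernel values are the learned weights.

```python
def conv1d(signal, kernel):
    """Slide `kernel` over `signal` (no padding, stride 1), one output per window."""
    size = len(kernel)
    return [
        # Linear combination of one window of inputs, collapsed to a single value.
        sum(w * x for w, x in zip(kernel, signal[start : start + size]))
        for start in range(len(signal) - size + 1)
    ]


signal = [1, 2, 3, 4, 5]
kernel = [1, 0, -1]  # hand-picked here; a layer would learn these values

feature_map = conv1d(signal, kernel)
```

Each window of three inputs is replaced by one number, so a signal of length 5 produces a feature map of length 3.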

Time series & text layers

- Helpful when input has a specific order

- Time series (e.g. stock closing prices for 1 week)

- Text (e.g. words on a page, given in a certain order)

- Text data is generally preceded by an embedding layer

- Generally should be paired w/ RMSprop optimizer

Simple RNN

- Each time step is concatenated with the last time step's output

- This concatenated input is fed into a dense layer equivalent

- The output of the dense layer equivalent is this time step's output

- Generally, only the output from the last time step is used

- Special handling for the first time step
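The bullets above translate into a short loop. This is a minimal sketch rather than any framework's implementation: the function name, the `tanh` activation, the all-zeros starting output for the first time step, and the toy weights are assumptions made for the example.

```python
import math


def simple_rnn(sequence, weights, biases, hidden_size):
    """Run a minimal RNN over `sequence` and return the last time step's output."""
    # Special handling for the first time step: there is no previous output,
    # so we start from a vector of zeros.
    previous_output = [0.0] * hidden_size
    for step_input in sequence:
        # Concatenate this time step's input with the last time step's output.
        concatenated = list(step_input) + previous_output
        # Dense layer equivalent: linear combination, bias, then activation.
        previous_output = [
            math.tanh(sum(w * x for w, x in zip(unit_weights, concatenated)) + bias)
            for unit_weights, bias in zip(weights, biases)
        ]
    return previous_output


# One input feature per time step and one hidden unit, with made-up weights.
sequence = [[1.0], [0.0]]
weights = [[1.0, 0.5]]  # [weight on input, weight on previous output]
biases = [0.0]

last_output = simple_rnn(sequence, weights, biases, hidden_size=1)
```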

LSTM

- Improvement on Simple RNN, with internal 'memory state'

- Helps avoid the issue of exploding / vanishing gradients

Utility layers

- There for utility use!