
One of the most powerful features of Splunk, the market leader in log aggregation and operational data intelligence, is the ability to extract fields while searching for data. Unfortunately, it can be a daunting task to get this working correctly. In this article, I’ll explain how you can extract fields using Splunk SPL’s rex command. I’ll provide plenty of examples with actual SPL queries. In my experience, rex is one of the most useful commands in the long list of SPL commands. I’ll also reveal one secret command that can make this process super easy. By reading this article in full, you will gain a deeper understanding of fields and learn how to use the rex command to extract fields from your data.

What is a field?

A field is a name-value pair that is searchable. Virtually all searches in Splunk use fields. A field can contain multiple values. Also, a given field need not appear in all of your events. Let’s consider the following SPL.

index=main sourcetype=access_combined_wcookie action=purchase

The fields in the above SPL are “index”, “sourcetype” and “action”. The values are “main”, “access_combined_wcookie” and “purchase” respectively.

Fields turbocharge your searches by enabling you to customize and tailor them. For example, consider the following SPL:

index=web sourcetype=access_combined status>=500 response_time>6000

The above SPL searches the index web, which happens to hold web access logs, for events with sourcetype equal to access_combined, status greater than or equal to 500 (indicating a server-side error) and response_time greater than 6 seconds (6000 milliseconds). This kind of flexibility in exploring data is not possible with simple text searching.
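To see why fields beat plain text search, here is a minimal Python sketch that assumes each event has already been reduced to a dictionary of extracted fields and applies the same four conditions described above. The events and the function name slow_server_errors are hypothetical; this illustrates the idea only, not how Splunk evaluates searches internally.

```python
# Hypothetical events, each already reduced to field name -> value pairs.
events = [
    {"index": "web", "sourcetype": "access_combined", "status": 200, "response_time": 120},
    {"index": "web", "sourcetype": "access_combined", "status": 503, "response_time": 7200},
    {"index": "web", "sourcetype": "access_combined", "status": 500, "response_time": 900},
    {"index": "main", "sourcetype": "secure", "status": 503, "response_time": 8000},
]


def slow_server_errors(events):
    """Keep only events that satisfy every field condition."""
    return [
        e for e in events
        if e.get("index") == "web"
        and e.get("sourcetype") == "access_combined"
        and e.get("status", 0) >= 500          # server-side error
        and e.get("response_time", 0) > 6000   # slower than 6 seconds (ms)
    ]


for event in slow_server_errors(events):
    print(event)
```

Only the second event survives: the third is an error but fast, and the fourth is slow but lives in a different index. A plain text search for the string 500 could not make these distinctions.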

How are fields created?

There is some good news here. Splunk automatically creates many fields for you. The process of creating fields from the raw data is called extraction. By default Splunk extracts many fields during index time. The most notable ones are:

- index

- host

- sourcetype

- source

- _time

- _indextime

- splunk_server

You can configure Splunk to extract additional fields during index time based on your data and the constraints you specify. This process is also known as adding custom fields during index time. It is achieved by configuring props.conf, transforms.conf and fields.conf. Note that if you are using Splunk in a distributed environment, props.conf and transforms.conf reside on the Indexers (also called Search Peers), while fields.conf resides on the Search Heads. And if you are using a Heavy Forwarder, props.conf and transforms.conf reside there instead of on the Indexers.

While index-time extraction seems appealing, you should try to avoid it for the following reasons.

- Indexed extractions use more disk space.

- Indexed extractions are not flexible. That is, if you change the configuration of any of the indexed extractions, the entire index needs to be rebuilt.

- There is a performance impact as Indexers do more work during index time.

Instead, you should use search-time extractions. Schema-on-Read is, in fact, one of Splunk’s greatest strengths. Schema-on-Write, which requires you to define the fields ahead of indexing, is what you will find in most log aggregation platforms (including Elasticsearch). With the Schema-on-Read approach that Splunk uses, you slice and dice the data during search time with no persistent modifications to the indexes. This also provides the most flexibility, as you define how the fields should be extracted.

Many ways of extracting fields in Splunk during search-time

There are several ways of extracting fields during search-time. These include the following.

- Using the Field Extractor utility in Splunk Web

- Using the Fields menu in Settings in Splunk Web

- Using the configuration files

- Using SPL commands

  - rex

  - extract

  - multikv

  - spath

  - xmlkv/xpath

  - kvform

For Splunk neophytes, using the Field Extractor utility is a great start. However, as you gain more experience with field extractions, you will start to realize that the Field Extractor does not always come up with the most efficient regular expressions. Eventually, you will start to leverage the power of the rex command and regular expressions, which is what we are going to look at in detail now.

What is rex?

rex is an SPL (Search Processing Language) command that extracts fields from the raw data based on the pattern you specify using regular expressions.

The command takes search results as input (i.e., the command is written after a pipe in SPL). It matches a regular expression pattern in each event and saves the matched value in a field that you specify. Let’s see a working example to understand the syntax.

Consider the following raw event.

Thu Jan 16 2018 00:15:06 mailsv1 sshd[5801]: Failed password for invalid user desktop from 194.8.74.23 port 2285 ssh2

The above event is from Splunk tutorial data. Let’s say you want to extract the port number as a field. Using the rex command, you would use the following SPL:

index=main sourcetype=secure
| rex "port\s(?<portNumber>\d+)\s"

Once you have port extracted as a field, you can use it just like any other field. For example, the following SPL retrieves events with port numbers between 1000 and 2000.

index=main sourcetype=secure
| rex "port\s(?<portNumber>\d+)\s"
| where portNumber >= 1000 AND portNumber < 2000
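For experimenting outside Splunk, the following Python sketch applies the same port pattern to the tutorial event with the standard re module and then filters on the numeric range. Python spells named groups (?P<name>...), a form Splunk also accepts; the helper names extract_port and in_range are made up for this illustration, and the sketch converts the captured text to an integer explicitly before comparing.

```python
import re

# Raw event from the Splunk tutorial data shown above.
raw = ("Thu Jan 16 2018 00:15:06 mailsv1 sshd[5801]: Failed password for "
       "invalid user desktop from 194.8.74.23 port 2285 ssh2")

# Same pattern as the rex command, with Python's (?P<name>...) syntax.
PORT_RE = re.compile(r"port\s(?P<portNumber>\d+)\s")


def extract_port(event):
    """Return the first port number in the event as an int, or None."""
    match = PORT_RE.search(event)
    return int(match.group("portNumber")) if match else None


def in_range(port, low=1000, high=2000):
    """Mirror `where portNumber >= 1000 AND portNumber < 2000`."""
    return port is not None and low <= port < high


port = extract_port(raw)
print(port, in_range(port))  # 2285 False
```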

Note: rex has two modes of operation. The sed mode, enabled with the option mode=sed, lets you replace characters in an existing field. We will not discuss sed mode further in this blog.

Note: Do not confuse the SPL command regex with rex. regex filters search results using a regular expression (i.e., it removes events that do not match the regular expression provided to the regex command).

Syntax of rex

Let’s unpack the syntax of rex.

rex field=<field> <PCRE named capture group>

The PCRE named capture group works the following way:

(?<name>regex)

The above expression captures the text matched by regex into the group name.

Note: You may also see (?P<name>regex) used in named capture groups (notice the character P). In Splunk, you can use either approach.

If you don’t specify the field option, rex applies to _raw (which is the entire event). Specifying a field can greatly improve performance, especially if your events are large (typically, I would consider any event over 10-15 lines as large).

There is also an option named max_match, which is set to 1 by default; that is, rex retains only the first match. If you set this option to 0, there is no limit to the number of matches in an event, and rex creates a multivalue field when there are multiple matches.
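The effect of max_match can be approximated in Python: keeping only the first match mirrors the default max_match=1, while keeping every match mirrors max_match=0. This is a sketch under that assumption, using a made-up event with two IP addresses and a hypothetical helper named rex_like; it returns a plain list of values rather than Splunk’s multivalue field type.

```python
import re

# Made-up event with two IPv4 addresses.
raw = "conn from 10.0.0.1 to 10.0.0.2 port 22"
IP_RE = re.compile(r"(?P<ip>(?:\d{1,3}\.){3}\d{1,3})")


def rex_like(event, pattern, field, max_match=1):
    """Collect values of a named group; max_match=0 means 'no limit'."""
    values = [m.group(field) for m in pattern.finditer(event)]
    return values if max_match == 0 else values[:max_match]


print(rex_like(raw, IP_RE, "ip"))               # ['10.0.0.1']
print(rex_like(raw, IP_RE, "ip", max_match=0))  # ['10.0.0.1', '10.0.0.2']
```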

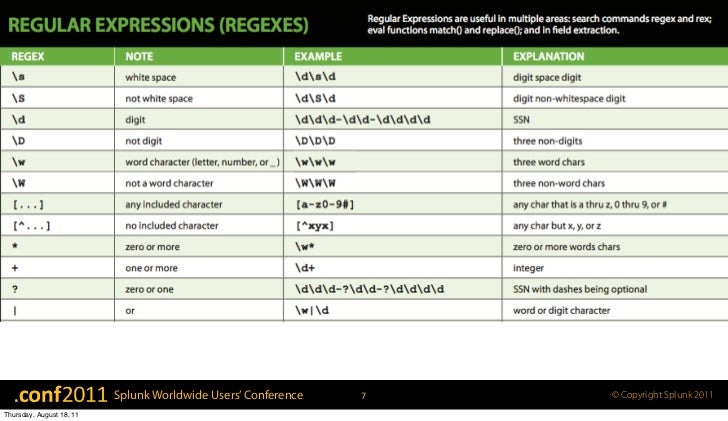

As you can sense by now, mastering rex means getting a good handle on regular expressions. In fact, when it comes to rex, it is all about regular expressions. They are best learned through examples. Let’s dive right in.

Learn rex through examples

Extract a value followed by a string

Raw Event:

Extract a field named username whose value follows the string user in the events.

index=main sourcetype=secure
| rex "user\s(?<username>\w+)\s"

Isn’t that beautiful?

Now, let’s dig deeper into the command and break it down.
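One way to break the username pattern down is to rewrite it, with the backslashes it needs, in Python’s verbose regex mode, where each piece can carry its own comment. This sketch uses Python’s re module rather than Splunk and runs against the tutorial event from earlier; the pattern is the same as user\s(?<username>\w+)\s apart from Python’s (?P<name>...) group syntax.

```python
import re

# Verbose mode ignores whitespace in the pattern and allows inline comments.
USER_RE = re.compile(
    r"""
    user               # the literal text "user"
    \s                 # exactly one whitespace character
    (?P<username>\w+)  # one or more word characters, saved as "username"
    \s                 # the whitespace that ends the value
    """,
    re.VERBOSE,
)

# Tutorial event from earlier in the article.
raw = ("Thu Jan 16 2018 00:15:06 mailsv1 sshd[5801]: Failed password for "
       "invalid user desktop from 194.8.74.23 port 2285 ssh2")

match = USER_RE.search(raw)
print(match.group("username"))  # desktop
```

Because the pattern requires whitespace after the value, a line that ends right after the username (with no trailing space) would not match.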

Extract a value based on a pattern in the string

This can be super handy. Extract Java exceptions as a field.

Raw Event:

08:24:42 ERROR : Unexpected error while launching program.
java.lang.NullPointerException
at com.xilinx.sdk.debug.core.XilinxAppLaunchConfigurationDelegate.isFpgaConfigured(XilinxAppLaunchConfigurationDelegate.java:293)

Extract any Java exception as a field. Note that Java exceptions have the form java.<package hierarchy>.<Exception>. For example:

java.lang.NullPointerException

java.net.ConnectException

javax.net.ssl.SSLHandshakeException

So, the following regex matching will do the trick.

java\..*Exception

Explanation:

java: A literal string java

\. : Backslash followed by period. In regex, a backslash escapes the following character, meaning that character is matched literally. A period (.) stands for any character in regex. In this case we want to match a literal period, so we escape it.

.* : Period followed by star (*). In regex, * means zero or more of the preceding character. Put simply, .* means anything (any run of characters, including none).

Exception: A literal string Exception.

Our full blown SPL looks like this:

index=main sourcetype=java-logs
| rex "(?<javaException>java\..*Exception)"

Let’s add some complexity to it. Let’s say you have exceptions that look like the following:

javax.net.ssl.SSLHandshakeException

Notice the “x” in javax? How can we account for it? Ideally, we want rex to extract the Java exception regardless of whether it begins with javax or java. A character class combined with the “?” quantifier does the trick:

java[x]?\..*Exception
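A quick way to convince yourself that the optional x helps is to run both patterns side by side. The Python sketch below uses two shortened, single-line sample events (the first condensed from the NullPointerException event above, the second hypothetical) and a made-up helper named extract.

```python
import re

# Shortened single-line samples: the first is condensed from the raw event
# above, the second is hypothetical.
events = [
    "08:24:42 ERROR : Unexpected error while launching program. java.lang.NullPointerException",
    "08:24:43 ERROR : javax.net.ssl.SSLHandshakeException: handshake failed",
]

JAVA_ONLY = re.compile(r"(?P<javaException>java\..*Exception)")
JAVA_OR_JAVAX = re.compile(r"(?P<javaException>java[x]?\..*Exception)")


def extract(pattern, event):
    """Return the javaException value, or None when the pattern does not match."""
    match = pattern.search(event)
    return match.group("javaException") if match else None


for event in events:
    print(extract(JAVA_ONLY, event), "|", extract(JAVA_OR_JAVAX, event))
# java.lang.NullPointerException | java.lang.NullPointerException
# None | javax.net.ssl.SSLHandshakeException
```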

Let us consider new raw events.

Our new SPL looks like this:

index=main sourcetype=java-logs1
| rex "(?<javaException>java[x]?\..*Exception)"

That’s much better. Our extracted field javaException captured the exception from both the events.

Wait a minute. Is something wrong with this extraction?

The extraction captured more than one exception. The raw event looks like this:

08:24:43 ERROR : javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

The regex java[x]?\..*Exception matches all the way up to the last instance of the string “Exception” in the event.

This is called greedy matching in regex. By default, quantifiers such as “*” and “+” try to match as many characters as possible. To force a ‘lazy’ behaviour, we add a “?” after the quantifier (so .* becomes .*?), which makes it match as few characters as possible. Our new SPL looks like this:

index=main sourcetype=java-logs1
| rex "(?<javaException>java[x]?\..*?Exception)"

That’s much much better!
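The greedy versus lazy difference is easy to reproduce outside Splunk. This Python sketch runs both patterns against the SSLHandshakeException event shown above (split across string literals only for readability), using the standard re module, whose *? quantifier behaves lazily in the same way.

```python
import re

# The SSLHandshakeException event shown above, split only for readability.
raw = ("08:24:43 ERROR : javax.net.ssl.SSLHandshakeException: "
       "sun.security.validator.ValidatorException: PKIX path building failed: "
       "sun.security.provider.certpath.SunCertPathBuilderException: "
       "unable to find valid certification path to requested target")

GREEDY = re.compile(r"(?P<javaException>java[x]?\..*Exception)")
LAZY = re.compile(r"(?P<javaException>java[x]?\..*?Exception)")

greedy_value = GREEDY.search(raw).group("javaException")
lazy_value = LAZY.search(raw).group("javaException")

# Greedy .* runs to the last "Exception" in the event.
print(greedy_value)
# Lazy .*? stops at the first "Exception": javax.net.ssl.SSLHandshakeException
print(lazy_value)
```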

Extract credit card numbers as a field

Let’s say you have credit card numbers in your log file (a very bad idea). Let’s say they all have the format XXXX-XXXX-XXXX-XXXX, where X is any digit. You can easily extract the field using the following SPL:

index="main" sourcetype="custom-card"
| rex "(?<cardNumber>\d{4}-\d{4}-\d{4}-\d{4})"

Curly braces {} apply a quantifier, that is, a repetition count. For example, \d{4} means exactly 4 digits, and \d{1,4} means between 1 and 4 digits. Note that you can group characters and apply quantifiers to the group too. For example, the above SPL can be written as follows:

index="main" sourcetype="custom-card"
| rex "(?<cardNumber>(\d{4}-){3}\d{4})"
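Both forms of the card-number pattern should extract the same value. The Python sketch below checks that on an obviously fake, made-up value; the inner (\d{4}-) group is an ordinary unnamed group whose only job here is to be repeated three times.

```python
import re

# Made-up, obviously fake card-like value.
raw = "order=42 card=1234-5678-9012-3456 status=ok"

# Every block spelled out explicitly.
EXPLICIT = re.compile(r"(?P<cardNumber>\d{4}-\d{4}-\d{4}-\d{4})")
# "Four digits and a hyphen" repeated three times, then four digits.
GROUPED = re.compile(r"(?P<cardNumber>(\d{4}-){3}\d{4})")

a = EXPLICIT.search(raw).group("cardNumber")
b = GROUPED.search(raw).group("cardNumber")
print(a, b, a == b)  # 1234-5678-9012-3456 1234-5678-9012-3456 True
```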

Extract multiple fields

You can extract multiple fields in the same rex command.

Consider the following raw event

Thu Jan 16 2018 00:15:06 mailsv1 sshd[5276]: Failed password for invalid user appserver from 194.8.74.23 port 3351 ssh2

The above event is from Splunk tutorial data.

You can extract the username, IP address and port number in one rex command as follows:

index=main sourcetype=secure
| rex "invalid user (?<userName>\w+) from (?<ipAddress>(\d{1,3}\.){3}\d{1,3}) port (?<port>\d+) "

Also note that you can pipe the results of the rex command to further reporting commands. For example, building on the above example, if you want to find the users with the most login errors, you would use the following SPL:

index=main sourcetype=secure
| rex "invalid user (?<userName>\w+) from (?<ipAddress>(\d{1,3}\.){3}\d{1,3}) port (?<port>\d+) "
| top limit=15 userName
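The multi-field extraction followed by top can be approximated in Python: groupdict() returns all named groups of a match at once, and collections.Counter stands in for top. Only the first sample event comes from the article; the other two are hypothetical, and unlike top, the sketch reports counts without a percentage column. The helper names extract_fields and top are made up for this illustration.

```python
import re
from collections import Counter

# The first line is the tutorial event above; the other two are hypothetical.
events = [
    "Thu Jan 16 2018 00:15:06 mailsv1 sshd[5276]: Failed password for invalid user appserver from 194.8.74.23 port 3351 ssh2",
    "mailsv1 sshd[1001]: Failed password for invalid user admin from 10.1.2.3 port 4022 ssh2",
    "mailsv1 sshd[1002]: Failed password for invalid user appserver from 10.1.2.4 port 5011 ssh2",
]

# Same pattern as the rex command, with Python's (?P<name>...) groups.
FAILED_RE = re.compile(
    r"invalid user (?P<userName>\w+) from "
    r"(?P<ipAddress>(\d{1,3}\.){3}\d{1,3}) port (?P<port>\d+) "
)


def extract_fields(event):
    """Return all named groups as a dict, or an empty dict when nothing matches."""
    match = FAILED_RE.search(event)
    return match.groupdict() if match else {}


def top(values, limit=15):
    """Most common values with their counts, similar in spirit to `top limit=N`."""
    return Counter(values).most_common(limit)


rows = [extract_fields(e) for e in events]
print(rows[0])
print(top(r["userName"] for r in rows if r))  # [('appserver', 2), ('admin', 1)]
```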

Regular Expression Cheat-Sheet

A shortcut

Regex, while powerful, can be hard to grasp in the beginning. Fortunately, Splunk includes a command called erex which will generate the regex for you. All you have to do is provide samples of data and Splunk will figure out a possible regular expression. While I don’t recommend relying fully on erex, it can be a great way to learn regex.

For example, use the following SPL to extract the IP address from the data we used in our previous example:

index=main sourcetype=secure
| erex ipAddress examples="194.8.74.23,109.169.32.135"

Not bad at all. Without writing any regex, we are able to use Splunk to figure out the field extraction for us. Here is the best part: When you click on “Job” (just above the Timeline), you can see the actual regular expression that Splunk has come up with.

Successfully learned regex. Consider using: | rex "(?i) from (?P<ipAddress>[^ ]+)"

I’ll let you analyze the regex that Splunk has come up with for this example :-). One hint: the (?i) in the above regex stands for “case insensitive”.
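To start that analysis, here is the suggested regex tried in Python, assuming the group is named ipAddress as in the erex command. The second sample line is made up to show a caveat: [^ ]+ grabs whatever non-space text follows “from”, so the learned regex is looser than a real IP address pattern. This is one reason not to rely fully on erex.

```python
import re

# Regex suggested by erex, assuming the group is named ipAddress.
LEARNED = re.compile(r"(?i) from (?P<ipAddress>[^ ]+)")

# Tutorial event from the article.
raw = ("Thu Jan 16 2018 00:15:06 mailsv1 sshd[5276]: Failed password for "
       "invalid user appserver from 194.8.74.23 port 3351 ssh2")
print(LEARNED.search(raw).group("ipAddress"))  # 194.8.74.23

# Made-up line: (?i) lets " FROM " match, and [^ ]+ accepts any non-space text.
print(LEARNED.search("Accepted password FROM host-a port 22").group("ipAddress"))  # host-a
```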

That brings us to the end of this blog. I hope you have become a bit more comfortable using rex to extract fields in Splunk. Like I mentioned, it is one of the most powerful commands in SPL. Feel free to use it as often as you need. Before you know it, you will be helping your peers with regex.

Happy Splunking!

The stats, chart, and timechart commands are great commands to know (especially stats). When I first started learning about the Splunk search commands, I found it challenging to understand the benefits of each command, especially how the BY clause impacts the output of a search. It wasn't until I did a comparison of the output (with some trial and a whole lotta error) that I was able to understand the differences between the commands.

These three commands are transforming commands. A transforming command takes your event data and converts it into an organized results table. You can use these three commands to calculate statistics, such as count, sum, and average.

Note: The BY keyword is shown in these examples and in the Splunk documentation in uppercase for readability. You can use uppercase or lowercase in your searches when you specify the BY keyword.

The Stats Command Results Table

Let's start with the stats command. We are going to count the number of events for each HTTP status code.

... | stats count BY status

The count of the events for each unique status code is listed in separate rows in a table on the Statistics tab:

| status | count |
|---|---|
| 200 | 34282 |
| 400 | 701 |
| 403 | 228 |
| 404 | 690 |

Basically the field values (200, 400, 403, 404) become row labels in the results table.

For the stats command, fields that you specify in the BY clause group the results based on those fields. For example, we receive events from three different hosts: www1, www2, and www3. If we add the host field to our BY clause, the results are broken out into more distinct groups.

... | stats count BY status, host

Each unique combination of status and host is listed on a separate row in the results table.

| status | host | count |
|---|---|---|
| 200 | www1 | 11835 |
| 200 | www2 | 11186 |
| 200 | www3 | 11261 |
| 400 | www1 | 233 |
| 400 | www2 | 257 |
| 400 | www3 | 211 |
| 403 | www2 | 228 |
| 404 | www1 | 244 |
| 404 | www2 | 209 |
| 404 | www3 | 237 |

Each field you specify in the BY clause becomes a separate column in the results table. You're splitting the rows first on status, then on host. The fields that you specify in the BY clause of the stats command are referred to as <row-split> fields.
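The row-splitting behaviour of stats count BY can be sketched in Python with collections.Counter keyed on the tuple of BY-field values. The events below are hypothetical and the helper stats_count_by is made up; rows are sorted only to make the output stable.

```python
from collections import Counter

# Hypothetical events carrying only the fields used in the BY clause.
events = [
    {"status": 200, "host": "www1"},
    {"status": 200, "host": "www2"},
    {"status": 200, "host": "www1"},
    {"status": 403, "host": "www2"},
]


def stats_count_by(events, *fields):
    """One row per unique combination of BY-field values, plus its count."""
    counts = Counter(tuple(e[f] for f in fields) for e in events)
    return [(*key, n) for key, n in sorted(counts.items())]


for row in stats_count_by(events, "status", "host"):
    print(row)
# (200, 'www1', 2)
# (200, 'www2', 1)
# (403, 'www2', 1)
```

No row is produced for status 403 on www1: combinations without events simply do not appear, just as in the stats results table above.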

In this example, there are five actions that customers can take on our website: addtocart, changequantity, purchase, remove, and view.

Let's add action to the search.

... | stats count BY status, host, action

You are splitting the rows first on status, then on host, and then on action. Below is a partial list of the results table that is produced when we add the action field to the BY clause:

| status | host | action | count |
|---|---|---|---|
| 200 | www1 | addtocart | 1837 |
| 200 | www1 | changequantity | 428 |
| 200 | www1 | purchase | 1860 |
| 200 | www1 | remove | 432 |
| 200 | www1 | view | 1523 |
| 200 | www2 | addtocart | 1743 |
| 200 | www2 | changequantity | 365 |
| 200 | www2 | purchase | 1742 |

One big advantage of using the stats command is that you can specify more than two fields in the BY clause and create results tables that show very granular statistical calculations.

Chart Command Results Table

Using the same basic search, let's compare the results produced by the chart command with the results produced by the stats command.

If you specify only one BY field, the results from the stats and chart commands are identical. Using the chart command in the search with two BY fields is where you really see differences.

Remember the results returned when we used the stats command with two BY fields are:

| status | host | count |
|---|---|---|
| 200 | www1 | 11835 |
| 200 | www2 | 11186 |
| 200 | www3 | 11261 |
| 400 | www1 | 233 |
| 400 | www2 | 257 |
| 400 | www3 | 211 |
| 403 | www2 | 228 |
| 404 | www1 | 244 |
| 404 | www2 | 209 |
| 404 | www3 | 237 |

Now let's substitute the chart command for the stats command in the search.

... | chart count BY status, host

The search returns the following results:

| status | www1 | www2 | www3 |
|---|---|---|---|
| 200 | 11835 | 11186 | 11261 |
| 400 | 233 | 257 | 211 |
| 403 | 0 | 228 | 0 |
| 404 | 244 | 209 | 237 |

The chart command uses the first BY field, status, to group the results. For each unique value in the status field, the results appear on a separate row. This first BY field is referred to as the <row-split> field. The chart command uses the second BY field, host, to split the results into separate columns. This second BY field is referred to as the <column-split> field. The values for the host field become the column labels.

Notice the results for the 403 status code in both results tables. With the stats command, there are no results for the 403 status code and the www1 and www3 hosts. With the chart command, when there are no events for the <column-split> field that contain the value for the <row-split> field, a 0 is returned.
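The pivot that chart performs, including the 0 fill, can be sketched in Python as follows. The events and the helper chart_count are hypothetical, and the sketch ignores chart options such as limits on the number of columns.

```python
from collections import Counter

# Hypothetical events carrying only the row-split and column-split fields.
events = [
    {"status": 200, "host": "www1"},
    {"status": 200, "host": "www2"},
    {"status": 200, "host": "www1"},
    {"status": 403, "host": "www2"},
]


def chart_count(events, row_field, col_field):
    """Pivot counts: one row per row-split value, one column per column-split value."""
    counts = Counter((e[row_field], e[col_field]) for e in events)
    rows = sorted({e[row_field] for e in events})
    cols = sorted({e[col_field] for e in events})
    # Combinations with no events become 0 instead of a missing row.
    table = {r: [counts.get((r, c), 0) for c in cols] for r in rows}
    return cols, table


cols, table = chart_count(events, "status", "host")
print(["status", *cols])          # ['status', 'www1', 'www2']
for status, row in table.items():
    print([status, *row])         # [200, 2, 1] then [403, 0, 1]
```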

One important difference between the stats and chart commands is how many fields you can specify in the BY clause.

With the stats command, you can specify a list of fields in the BY clause, all of which are <row-split> fields. The syntax for the stats command BY clause is:

BY <field-list>

For the chart command, you can specify at most two fields: one <row-split> field and one <column-split> field.

The chart command provides two alternative ways to specify these fields in the BY clause. For example:

... | chart count BY status, host


... | chart count OVER status BY host

The syntax for the chart command BY clause is:

[ BY <row-split> <column-split> ] | [ OVER <row-split> ] [ BY <column-split> ]

The advantage of using the chart command is that it creates a consolidated results table that is better for creating charts. Let me show you what I mean.

Stats and Chart Command Visualizations

When you run the stats and chart commands, the event data is transformed into results tables that appear on the Statistics tab. Click the Visualization tab to generate a graph from the results. Here is the visualization for the stats command results table:

The status field forms the X-axis, and the host and count fields form the data series. The range of count values forms the Y-axis.

There are several problems with this chart:

- There are multiple values for the same status code on the X-axis.

- The host values (www1, www2, and www3) are string values and cannot be measured in the chart. The host shows up in the legend, but there are no blue columns in the chart.

Because of these issues, the chart is confusing and does not convey the information that is in the results table.

While you can create a usable visualization from the stats command results table, the visualization is useful only when you specify one BY clause field.

It's better to use the chart command when you want to create a visualization using two BY clause fields:

The status field forms the X-axis, and the host values form the data series. The range of count values forms the Y-axis.

What About the Timechart Command?

When you use the timechart command, the results table is always grouped by the event timestamp (the _time field). The time value is the <row-split> for the results table. So in the BY clause, you specify only one field, the <column-split> field. For example, this search generates a count and specifies the status field as the <column-split> field:

... | timechart count BY status

This search produces this results table:

| _time | 200 | 400 | 403 | 404 |
|---|---|---|---|---|
| 2018-07-05 | 1038 | 27 | 7 | 19 |
| 2018-07-06 | 4981 | 111 | 35 | 98 |
| 2018-07-07 | 5123 | 99 | 45 | 105 |
| 2018-07-08 | 5016 | 112 | 22 | 105 |
| 2018-07-09 | 4732 | 86 | 34 | 84 |
| 2018-07-10 | 4791 | 102 | 23 | 107 |
| 2018-07-11 | 4783 | 85 | 39 | 98 |
| 2018-07-12 | 3818 | 79 | 23 | 74 |

If you search by the host field instead, this results table is produced:

| _time | www1 | www2 | www3 |
|---|---|---|---|
| 2018-07-05 | 372 | 429 | 419 |
| 2018-07-06 | 2111 | 1837 | 1836 |
| 2018-07-07 | 1887 | 2046 | 1935 |
| 2018-07-08 | 1927 | 1869 | 2005 |
| 2018-07-09 | 1937 | 1654 | 1792 |
| 2018-07-10 | 1980 | 1832 | 1733 |
| 2018-07-11 | 1855 | 1847 | 1836 |
| 2018-07-12 | 1559 | 1398 | 1436 |

The time increments that you see in the _time column are based on the search time range or the arguments that you specify with the timechart command. In the previous examples, the time range was set to All time and there are only a few weeks of data. Because we didn't specify a span, a default time span is used. In this situation, the default span is 1 day.

If you specify a time range like Last 24 hours, the default time span is 30 minutes. The Usage section in the timechart documentation specifies the default time spans for the most common time ranges. This results table shows the default time span of 30 minutes:

| _time | www1 | www2 | www3 |
|---|---|---|---|
| 2018-07-12 15:00:00 | 44 | 22 | 73 |
| 2018-07-12 15:30:00 | 34 | 53 | 31 |
| 2018-07-12 16:00:00 | 14 | 33 | 36 |
| 2018-07-12 16:30:00 | 46 | 21 | 54 |
| 2018-07-12 17:00:00 | 75 | 26 | 38 |
| 2018-07-12 17:30:00 | 38 | 51 | 14 |
| 2018-07-12 18:00:00 | 62 | 24 | 15 |

The timechart command includes several options that are not available with the stats and chart commands. For example, you can specify a time span like we have in this search:

... | timechart span=12h count BY host

| _time | www1 | www2 | www3 |
|---|---|---|---|
| 2018-07-04 17:00 | 801 | 783 | 819 |
| 2018-07-05 05:00 | 795 | 847 | 723 |
| 2018-07-05 17:00 | 1926 | 1661 | 1642 |
| 2018-07-06 05:00 | 1501 | 1774 | 1542 |
| 2018-07-06 17:00 | 2033 | 1909 | 1857 |
| 2018-07-07 05:00 | 1482 | 1671 | 1594 |
| 2018-07-07 17:00 | 2027 | 1818 | 2036 |

In this example, the 12-hour increments in the results table are based on when you run the search (local time) and how that aligns with UNIX time (sometimes referred to as epoch time).
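A simplified model of that alignment: floor each timestamp to a multiple of the span counted from the UNIX epoch, then display the bucket start in local time. The Python sketch below assumes a local time zone seven hours behind UTC (for example, US Pacific daylight time). Under that assumption, 12-hour buckets start at 05:00 and 17:00, which would match the table above. Splunk's real bucketing has more rules than this sketch captures, and the helper bucket_start is made up.

```python
from datetime import datetime, timedelta, timezone

SPAN = 12 * 60 * 60  # span=12h, in seconds


def bucket_start(epoch_seconds, span=SPAN):
    """Floor a UNIX timestamp to the start of its span-sized bucket."""
    return epoch_seconds - (epoch_seconds % span)


# Assumption: local time is seven hours behind UTC (e.g. US Pacific daylight time).
local = timezone(timedelta(hours=-7))

for hour in (9, 20):
    event_time = datetime(2018, 7, 5, hour, 30, tzinfo=local)
    start = bucket_start(int(event_time.timestamp()))
    print(event_time, "->", datetime.fromtimestamp(start, tz=local))
# 2018-07-05 09:30:00-07:00 -> 2018-07-05 05:00:00-07:00
# 2018-07-05 20:30:00-07:00 -> 2018-07-05 17:00:00-07:00
```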

Note: There are other options you can specify with the timechart command, which we'll explore in a separate blog.

So how do these results appear in a chart? On the Visualization tab, you see that _time forms the X-axis. The axis marks the Midnight and Noon values for each date. However, the columns that represent the data start at 1700 each day and end at 0500 the next day.

The field specified in the BY clause forms the data series. The range of count values forms the Y-axis.

In Summary

The stats, chart, and timechart commands have some similarities, but you’ve got to pay attention to the BY clauses that you use with them.

- Use the stats command when you want to create results tables that show granular statistical calculations.

- Use the stats command when you want to specify 3 or more fields in the BY clause.

- Use the chart command when you want to create results tables that show consolidated and summarized calculations.

- Use the chart command to create visualizations from the results table data.

- Use the timechart command to create results tables and charts that are based on time.

SPL it like you mean it - Laura

References


Splunk documentation:

- Stats command: https://docs.splunk.com/Documentation/Splunk/latest/SearchReference/Stats

- Chart command: https://docs.splunk.com/Documentation/Splunk/latest/SearchReference/Chart

- Timechart command: https://docs.splunk.com/Documentation/Splunk/latest/SearchReference/Timechart


----------------------------------------------------

Thanks!

Laura Stewart