Web scraping with Python means collecting data from web pages programmatically. It is mostly used for data mining in support of data analysis, data science, and machine learning, but there are many other use cases.

Prefer video? Check out this Python web scraping tutorial on YouTube:

Web scraping is a bit of a dark art, in the sense that with great power comes great responsibility. Use what you learn in this tutorial only for ethical scraping. In this Python web scraping tutorial, we will scrape the Worldometer website for some data on the pandemic.

We will then do something with that data. Since this is a web scraping tutorial, we will focus mainly on the scraping and only touch briefly on the data processing side.

We will be using a Python library called BeautifulSoup for our web scraping project. It is important to note that Beautiful Soup isn’t a silver bullet for web scraping.

It is mainly a wrapper around a parser, which makes it more intuitive and simpler to extract data from markup like HTML and XML. If you are looking for something that can navigate pages and crawl websites, Beautiful Soup won’t do that on its own.

There are other options for that, such as Scrapy. There will be a tutorial on Scrapy later on as well.

Then you can decide which is best for your particular project or use case. Before we get started, let us install BeautifulSoup. For this we will need to create a virtual environment.

The procedure is very similar whether you are on Windows, macOS, or Linux. So here we go:

Python beautifulsoup: installation

Let us create a virtual environment for our project. If you are on Windows, open a PowerShell or cmd prompt. On macOS or Linux, open a terminal and execute the following commands.
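As a minimal sketch, the environment can also be created from Python itself, assuming the standard library venv module; on the command line the usual equivalent is python -m venv bsenv. The create_env helper name is hypothetical and only used for illustration.

```python
import venv
from pathlib import Path


def create_env(env_dir="bsenv", with_pip=True):
    """Create a virtual environment folder, similar to `python -m venv bsenv`."""
    # with_pip=True asks venv to bootstrap pip inside the new environment.
    venv.create(env_dir, with_pip=with_pip)
    return Path(env_dir)


# Example (creates ./bsenv in the current directory):
# create_env("bsenv")
```

Activating the environment still has to happen in your shell, since activation changes the shell session rather than the Python process.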

Here bsenv is the folder that will hold our virtual environment. You can now run:

Windows:

Linux/Mac:

Your virtual environment should now be active, and we can install BeautifulSoup.

Run this to install on Linux, macOS, or Windows:

To test that BeautifulSoup is installed, start a Python interpreter and import it like this.
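A small sketch of such a check, assuming the package was installed from PyPI as beautifulsoup4 and is imported under the module name bs4. The is_installed helper is hypothetical; it uses the standard library to look the module up without crashing when it is missing.

```python
import importlib.util


def is_installed(module_name="bs4"):
    """Return True if the named top-level module can be found."""
    return importlib.util.find_spec(module_name) is not None


if is_installed():
    import bs4
    print("BeautifulSoup version:", bs4.__version__)
else:
    print("bs4 not found; try: pip install beautifulsoup4")
```

In an interactive session, simply typing from bs4 import BeautifulSoup and getting no error tells you the same thing.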

If that worked for you, then great, you are all set! Let us now start with the most basic example.

Beautifulsoup: HTML page python web scraping / parsing

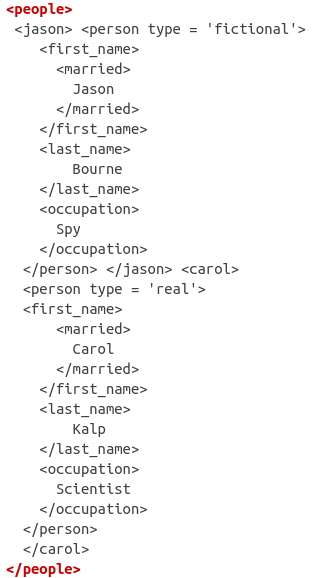

Here is an HTML example to start with. Go ahead and paste it into your favorite editor and save it as index.html.

Once you have saved that, create a new Python script called scrape.py. Here is the code we are going to use to get some info from our index.html file.

This very basic bit of code will grab the title tag text from our index.html document. This is how the output will look if you run it.
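A minimal sketch of this step. SAMPLE_HTML is a hypothetical stand-in for index.html (the tutorial's actual file may differ), and get_title is an illustrative helper name.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for index.html; the real file may look different.
SAMPLE_HTML = """<html>
<head><title>Test Page</title></head>
<body><h1>Hello</h1></body>
</html>"""


def get_title(html):
    """Return the text of the first <title> tag, or None if there is none."""
    soup = BeautifulSoup(html, "html.parser")
    return soup.title.get_text(strip=True) if soup.title else None


print(get_title(SAMPLE_HTML))
```

To work from the saved file instead, read it with open("index.html", encoding="utf8") and pass the contents to get_title.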

Let’s now try to do something a little more complicated, like grabbing all the tr tags and then displaying the names in them.

Your output should look something like this.

Here is how this code works. We load the HTML with a basic file read in Python. We then instantiate the BeautifulSoup parser with our HTML data and do a find_all on all the tr tags.

We then loop over all the trs and find all the td children. If a row has no td children, we skip it. Finally we take the first td element and print the text it contains.
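The steps above can be sketched as follows. The table in SAMPLE_HTML is hypothetical, and first_cells is an illustrative name rather than anything from the original script.

```python
from bs4 import BeautifulSoup

# Hypothetical table; the header row uses <th>, the data rows use <td>.
SAMPLE_HTML = """<table>
<tr><th>Name</th><th>Age</th></tr>
<tr><td>Alice</td><td>30</td></tr>
<tr><td>Bob</td><td>25</td></tr>
</table>"""


def first_cells(html):
    """Return the text of the first <td> in every row that has one."""
    soup = BeautifulSoup(html, "html.parser")
    names = []
    for tr in soup.find_all("tr"):
        tds = tr.find_all("td")
        if not tds:
            continue  # rows without <td> children (e.g. headers) are skipped
        names.append(tds[0].get_text(strip=True))
    return names


for name in first_cells(SAMPLE_HTML):
    print(name)
```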

So far so good, pretty simple, right? Let’s now look at getting some data using a request. The URL we will be using is: https://www.worldometers.info/coronavirus/

Python web scraping: From a web page url

To be able to load up the html from the web page we will use the requests library in python and then feed that data to beautiful soup. Here is the code.

If you run this, it prints a CSV-style set of data like this, which we can use for data analysis or feed into some other data source.

Here is how the code works. First we import requests, so that our Python script can make web requests. We then call requests.get with the URL and read the text version of the response, which gives us the raw HTML data.

Next we pass this to our BeautifulSoup object and use html.parser. Then we select the table with id=main_table_countries_today.

To find this id, open the page in Chrome or Firefox, head over to the table, then right-click it and select the Inspect option.

If you have done this, you should be able to find the id main_table_countries_today. Once we have that, we use the data_table variable we just created, which now contains the table element.

Then we simply do a find all to find all the tr elements in that table. Next we loop over all our rows and just initialize an empty rowlist to store our data in. After that we open a try except block, so that if any of the elements are missing in our data we won’t error out our script.

The try except will basically skip over the critical errors so our script can continue reading the html. In the try except we do a find all for all the td tags, then finally loop over them to add the columns to our rowlist.

After that inner loop we join the columns with a comma and add the resulting row to our datalist. Finally, once the loop over all rows has finished, we join the datalist rows to create a CSV-like printout of the data.
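A sketch of the walkthrough above, under a few assumptions. The function names fetch_html and table_to_csv are hypothetical, and instead of joining on a bare comma this version uses the standard csv module so that values containing commas (such as 1,234) are quoted. The network call is kept in its own function so the parsing can be tried on saved HTML.

```python
import csv
import io

from bs4 import BeautifulSoup

URL = "https://www.worldometers.info/coronavirus/"
TABLE_ID = "main_table_countries_today"


def fetch_html(url=URL):
    """Download the page and return its raw HTML text."""
    import requests  # third-party; imported here so parsing works without it

    response = requests.get(url, timeout=30)
    response.raise_for_status()
    return response.text


def table_to_csv(html, table_id=TABLE_ID):
    """Turn the <td> cells of the table with the given id into CSV text."""
    soup = BeautifulSoup(html, "html.parser")
    data_table = soup.find("table", id=table_id)
    if data_table is None:
        return ""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for tr in data_table.find_all("tr"):
        rowlist = [td.get_text(strip=True) for td in tr.find_all("td")]
        if rowlist:  # header rows contain only <th>, so they are skipped
            writer.writerow(rowlist)
    return out.getvalue()


# Example (performs one real request, so use sparingly):
# print(table_to_csv(fetch_html()))
```

Whether the page still exposes a table with this id is not guaranteed, which is why the function returns an empty string when it is missing.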

As you can see from the code, gathering some basic tabular data is pretty simple. Scraping like this really needs to be done carefully, as you don’t want to overwhelm anyone’s server.

Once again, this is only for educational purposes, and what you do with it is at your own risk. For basic scraping of websites you own, however, you are free to collect data this way.

If you want to learn web scraping, it is best to do as much of it offline as possible, so you can test rapidly and don’t have to send a request to a server every time.

For that you can just save a particular web page using your browser and work on that html file locally.
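One way to apply this advice in code is to cache the page in a local file and only fetch it when the file does not exist yet. This is a sketch with a hypothetical helper name, load_html, and a caller-supplied fetch function; it is not part of the original script.

```python
from pathlib import Path


def load_html(path, fetch=None):
    """Return HTML from a local file, calling fetch() once if it is missing."""
    cache = Path(path)
    if not cache.exists():
        if fetch is None:
            raise FileNotFoundError(f"{path} not found and no fetch function given")
        # Save the downloaded page so later runs stay offline.
        cache.write_text(fetch(), encoding="utf8")
    return cache.read_text(encoding="utf8")


# Example with a page saved from the browser:
# html = load_html("page.html")
```

With this in place, repeated test runs read the saved copy instead of hitting the server again.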

Let us look at another example of some web scraping before we end off this tutorial.

Python web scraping: creating a blog post feed

You may want to create a blog post feed. Maybe you are building an application that needs to retrieve basic data from a blog post for a social sharing website.

In that case you can use BeautifulSoup to parse some of this data.

To show you how this can work let us scrape some data from my own blog from the home page.

So we will use the url: https://generalistprogrammer.com

So for this I have saved the html page using my browser like this.

I suggest if you want to follow along you should do the same. Please don’t do a request to my server in python. So save that as gp.html.

Now here is a pro tip. You might get this really strange error with this code.

File "C:\Program Files\WindowsApps\PythonSoftwareFoundation.Python.3.8_3.8.1520.0_x64__qbz5n2kfra8p0\lib\encodings\cp1252.py", line 23, in decode

return codecs.charmap_decode(input,self.errors,decoding_table)[0]

UnicodeDecodeError: 'charmap' codec can't decode byte 0x8d in position 18403: character maps to <undefined>

If you get this error, it is because the file is not being read as UTF-8. To fix this, we modify our code to look like this.

We basically just added encoding='utf8'. When you run it now, you will get the output of the web page.
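A minimal sketch of the fixed file read, assuming the page was saved as gp.html. The load_soup helper name is hypothetical.

```python
from bs4 import BeautifulSoup


def load_soup(path):
    """Parse a saved HTML file, reading it explicitly as UTF-8."""
    # Without encoding="utf8", Windows may fall back to cp1252 and raise
    # UnicodeDecodeError on bytes that are not valid in that code page.
    with open(path, encoding="utf8") as f:
        return BeautifulSoup(f.read(), "html.parser")


# Example, once gp.html has been saved from the browser:
# print(load_soup("gp.html").prettify())
```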

You may have noticed that I used .prettify at the end. That just makes BeautifulSoup output the HTML in a more structured, easier to read form. Let’s now follow the same procedure as before by using the inspector tool in Chrome or Firefox like this.

That will open up the inspector tool like this:

This helps us find the entry point for our scraper. We want to look at all articles, and you will notice the article tag slightly above this tag. So let’s start with this bit of code.

Run that and you should see output like this, which should contain the HTML for one particular article.

So this is a nice example because there is just so much for us to collect from this html. Things like the title, date published, author, etc. So let’s start off with the title and the text of the article then move on to the date published etc.

To summarize, we look inside our article, find the first h2 tag and then the first a tag inside it, and use its text as our title. For the summary, we look for the div element with the class blog-entry-summary, find the first p tag in there, and use its text to populate our summary.
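The lookup described above can be sketched like this. SAMPLE_HTML is hypothetical markup modeled only on the tag and class names mentioned in the text; the real page will contain much more.

```python
from bs4 import BeautifulSoup

# Hypothetical markup modeled on the structure described in the text.
SAMPLE_HTML = """
<article>
  <h2><a href="/post-1">First post</a></h2>
  <div class="blog-entry-summary"><p>Summary of the first post.</p></div>
</article>
"""


def title_and_summary(article):
    """Return (title, summary) for one <article> element."""
    title = article.find("h2").find("a").get_text(strip=True)
    summary_div = article.find("div", class_="blog-entry-summary")
    summary = summary_div.find("p").get_text(strip=True)
    return title, summary


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
article = soup.find("article")  # first article on the page
print(title_and_summary(article))
```

Note that find returns None when a tag is missing, so on real pages it is worth checking each step before chaining the next call.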

Pretty simple right? Here is what your output should look like.

Simple! Let’s now try to get the publishedDate and the author.

Now it becomes a little more complicated. For the author, we first create an author_section variable by finding the li item with class='meta-author'.

Next we take the first a tag in that section and get its text to get our author. Where it gets interesting is the date section, because it looks like this.

It’s not in any predefined tag. So we need to do some manipulation. To show you the issue run this bit of code.

You will notice that you now get this output when you run this code.

That is great if that is how you want it. However, I only want the date. To fix that, we apply a replace on the string "Post published:", ending up with this code and this output.
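A sketch of the author and date extraction. The meta-author class and the "Post published:" prefix come from the text; the meta-date class and the rest of SAMPLE_HTML are assumptions made so the example is self-contained.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; only meta-author and the "Post published:" prefix
# are taken from the tutorial text. The meta-date class is an assumption.
SAMPLE_HTML = """
<article>
  <ul>
    <li class="meta-author"><a href="/author/jane">Jane</a></li>
    <li class="meta-date">Post published: March 1, 2021</li>
  </ul>
</article>
"""


def author_and_date(article):
    """Return (author, published_date) for one <article> element."""
    author_section = article.find("li", class_="meta-author")
    author = author_section.find("a").get_text(strip=True)
    raw_date = article.find("li", class_="meta-date").get_text(strip=True)
    # Strip the label so only the date itself remains.
    published_date = raw_date.replace("Post published:", "").strip()
    return author, published_date


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
print(author_and_date(soup.find("article")))
```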

That looks good, right? Now we need to get the data for all the blog posts on that page. To do that we need to loop over all the articles, which is very simple: we add this bit at the top.

If you run it, it gives us this output: all the different little articles for the page.

If you missed the part we added at the top, here is the full code again for you to test.
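As a sketch of the loop, assuming the same hypothetical markup as before (two article elements with an h2 link and a blog-entry-summary div). The scrape_posts name is illustrative.

```python
from bs4 import BeautifulSoup

# Hypothetical page with two articles.
SAMPLE_HTML = """
<article>
  <h2><a href="/post-1">First post</a></h2>
  <div class="blog-entry-summary"><p>Summary one.</p></div>
</article>
<article>
  <h2><a href="/post-2">Second post</a></h2>
  <div class="blog-entry-summary"><p>Summary two.</p></div>
</article>
"""


def scrape_posts(html):
    """Return a list of {'title', 'summary'} dicts, one per <article>."""
    soup = BeautifulSoup(html, "html.parser")
    posts = []
    for article in soup.find_all("article"):
        heading = article.find("h2")
        summary = article.find("div", class_="blog-entry-summary")
        if heading is None or summary is None:
            continue  # skip articles that lack the expected markup
        posts.append({
            "title": heading.find("a").get_text(strip=True),
            "summary": summary.find("p").get_text(strip=True),
        })
    return posts


for post in scrape_posts(SAMPLE_HTML):
    print(post["title"], "|", post["summary"])
```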

So great but what about attributes?

So far this tutorial has shown basic traversal, but what if we want to read some attribute data? Let me show you how we can do this using the same code we have above, but reading another part of that HTML.

I want to now go and add the url to the full post in our data we scraped as well. For that we will need to read one of the href attributes. Let’s look at how we can retrieve that if we look at the article html.

Here we have a class blog-entry-readmore, followed by an a tag with the href we are looking for. So let’s just add this bit of code to our script.

So pretty simple. We can use article then find the class and traverse to the first a tag then access the attributes using the “href” index in the dictionary beautifulsoup builds up for us.

If, for argument’s sake, we wanted the title from that same a tag, we could use this code.
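A short sketch of reading attributes. The blog-entry-readmore class is from the text; the URL and title values in SAMPLE_HTML are made up for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; the href and title values are placeholders.
SAMPLE_HTML = """
<article>
  <div class="blog-entry-readmore">
    <a href="https://example.com/post-1" title="First post">Continue reading</a>
  </div>
</article>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
article = soup.find("article")
link = article.find("div", class_="blog-entry-readmore").find("a")

url = link["href"]         # dictionary-style access; KeyError if missing
title = link.get("title")  # .get returns None if the attribute is missing
print(url, title)
```

Using .get is the more forgiving choice when you are not sure every link carries the attribute.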

So really really easy to get those as well.

Another python web scraping with beautifulsoup example

What about using Python web scraping to keep an eye on our favorite stocks? You can easily do some web scraping for that as well. Here is a snippet of HTML as an example of data you might want to consume.

Go ahead and copy and paste this into your editor and name it stocks.html. We will now use beautiful soup to perform python web scraping on this data as well.

With this data set we want to do something a little more interesting and save it into a database using Python.

This is just to show you how you can take your Python web scraping to the next level. You may one day want to add your data to a database, where you can query and interrogate it.

Let us start off just by getting a database table working in python. To do this we will use a database called sqlite.

So to start create a dbscrape.py file. First thing we want to do is create ourselves a table. To do that start off with this code.

To quickly explain all this: we import sqlite3 and open a connection to a file called stocks.db. If it doesn’t exist yet, the sqlite3 package in Python will create it for us.

Then we open up a cursor, which is basically a pointer into our database. We then execute a create table statement that gives our table the fields time, price, percent, and change.

Finally we commit this change and close our connection.
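A minimal sketch of these steps using the standard library sqlite3 module. The table name stocks and the column types are assumptions; only the file name stocks.db and the four field names come from the text.

```python
import sqlite3


def create_table(db_path="stocks.db"):
    """Create the stocks table; the file is created if it does not exist."""
    conn = sqlite3.connect(db_path)
    cur = conn.cursor()
    # Table name and column types are assumed for this sketch.
    cur.execute(
        "CREATE TABLE stocks (time TEXT, price REAL, percent TEXT, change REAL)"
    )
    conn.commit()
    conn.close()


# Example:
# create_table("stocks.db")
```

Running this a second time against the same file raises sqlite3.OperationalError, because the table already exists.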

Simple right? Great!

I like to use a plugin in Visual Studio Code called SQLite Explorer by alexcvzz. Here is what it looks like in Visual Studio Code if you want to install it for yourself.

With this installed you can now view your database simply by right clicking it and opening the database.

Which then should look something like this.

So you can see how that is useful. Let us add a little extra logic now: we want to drop our table if it already exists, so we can refresh it each time.

In order to do that just add this extra line before your create table query.

Let us create some sample data so we can see how all this will be inserted into our table.

Here is the full code again in case you missed it.
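A sketch of what the combined script could look like: drop the table if it exists, recreate it, and insert some sample rows. The table name, column types, the rebuild_table helper, and the values in SAMPLE_ROWS are all assumptions for illustration.

```python
import sqlite3

# Made-up sample rows: (time, price, percent, change).
SAMPLE_ROWS = [
    ("09:30", 101.5, "+0.50%", 0.5),
    ("09:35", 101.0, "-0.49%", -0.5),
]


def rebuild_table(db_path="stocks.db", rows=SAMPLE_ROWS):
    """Recreate the stocks table from scratch and insert the given rows."""
    conn = sqlite3.connect(db_path)
    try:
        cur = conn.cursor()
        cur.execute("DROP TABLE IF EXISTS stocks")  # refresh on every run
        cur.execute(
            "CREATE TABLE stocks (time TEXT, price REAL, percent TEXT, change REAL)"
        )
        # Parameter placeholders keep the values out of the SQL string.
        cur.executemany("INSERT INTO stocks VALUES (?, ?, ?, ?)", rows)
        conn.commit()
    finally:
        conn.close()


# Example:
# rebuild_table("stocks.db")
```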

If you check that out in the SQLite Explorer plugin in Visual Studio Code, you should now see this. If you click on this play button,

you end up with this table:

Great, now we have a working table that can accept data. Let us now scrape the data from our HTML and put it in our SQLite table.

Here is the code to start using python web scraping on our html and putting it into our sqlite table:

So this is how it works.

- Import beautifulsoup.

- Create our connection and SQLite table.

- We instantiate beautifulsoup with html.parser for our web scraping

- Then we find all tr in our html.

- Loop over the tr then skip over anything that is a heading or th tag.

- Within that tr we find all td.

- Then simply assign each variable to a column from each td.

- After that we execute the insert code with our data.

- Finally commit our data and close our connection.
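The steps above can be sketched as follows. SAMPLE_HTML is a hypothetical stand-in for stocks.html, and the table name, the TEXT column types, and the scrape_to_db helper are assumptions.

```python
import sqlite3

from bs4 import BeautifulSoup

# Hypothetical stand-in for stocks.html.
SAMPLE_HTML = """<table>
<tr><th>Time</th><th>Price</th><th>Percent</th><th>Change</th></tr>
<tr><td>09:30</td><td>101.50</td><td>+0.50%</td><td>0.50</td></tr>
<tr><td>09:35</td><td>101.00</td><td>-0.49%</td><td>-0.50</td></tr>
</table>"""


def scrape_to_db(html, db_path="stocks.db"):
    """Parse the table rows and store them in a fresh stocks table."""
    soup = BeautifulSoup(html, "html.parser")
    conn = sqlite3.connect(db_path)
    try:
        cur = conn.cursor()
        cur.execute("DROP TABLE IF EXISTS stocks")
        cur.execute(
            "CREATE TABLE stocks (time TEXT, price TEXT, percent TEXT, change TEXT)"
        )
        for tr in soup.find_all("tr"):
            tds = tr.find_all("td")
            if len(tds) < 4:
                continue  # heading rows contain <th>, not <td>
            time_, price, percent, change = (
                td.get_text(strip=True) for td in tds[:4]
            )
            cur.execute(
                "INSERT INTO stocks VALUES (?, ?, ?, ?)",
                (time_, price, percent, change),
            )
        conn.commit()
    finally:
        conn.close()


# Example:
# scrape_to_db(SAMPLE_HTML, "stocks.db")
```

The values are stored as text exactly as scraped; converting price and change to numbers before inserting would be a reasonable next step.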

Here is what you should end up with in your sqlite table when you inspect it after running this python script.

As simple as that, you can combine Python web scraping with persistent storage to store your data.

Python web scraping: use cases

I can think of a few use cases for this. Maybe you own an eCommerce store and need to validate the prices on it. You could use scraping to do a daily check that the pricing on the store is still correct.

If you are a researcher you can now collect data from public data feeds to help your research. Scraping when done ethically and for the right reason can have some really useful applications.

You may even want to use this for automated testing if you are a web developer. You basically create a unit test that expects a certain output on a web page; if the page matches, the test passes, otherwise it fails.

So you could build a script to check that your web pages act in the expected way after a major code change.
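A small sketch of that idea using the standard unittest module. PAGE_HTML is a hypothetical page; in practice you would load the HTML your site actually serves.

```python
import unittest

from bs4 import BeautifulSoup

# Hypothetical page content standing in for a real response body.
PAGE_HTML = (
    "<html><head><title>My Shop</title></head>"
    "<body><h1>Welcome</h1></body></html>"
)


class PageTest(unittest.TestCase):
    def setUp(self):
        self.soup = BeautifulSoup(PAGE_HTML, "html.parser")

    def test_title(self):
        self.assertEqual(self.soup.title.get_text(), "My Shop")

    def test_heading(self):
        self.assertEqual(self.soup.h1.get_text(), "Welcome")
```

Saved in a file, these tests can be run with python -m unittest after each code change.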

You could build a scraper to help automate content creation, by collecting excerpts from your blog that you can use in your YouTube video descriptions.

Really, you just need to use your imagination about what you would like to do. You could even build some nice little scripts that log in to your favorite subscription website and tell you when new content has been released.

Other things to read up about

There are quite a few alternatives for web scraping in Python. You could use things like the webdrivers that come with the Selenium library. Selenium has mostly been used for automated testing of web pages.

However, because it drives a real browser, it is often quite useful for scraping too. Especially when data gets rendered via JavaScript or AJAX and you need your scraper to be more DOM aware, Selenium might be the right choice for you.

I will be doing a tutorial on Selenium WebDriver in the future as well. From my own experience, however, I would not use Selenium for large data sets, since running a full browser instance adds overhead.

Then you have Scrapy, which is another Python scraping library. It carries a little more functionality and allows you to crawl pages more easily, as it has built-in support for following links, navigation, and better HTTP requests.

It also offers better support for cookies and login sessions, so it will make your life easier if you are scraping behind a logged-in session. Scrapy can also handle proxies, which sometimes come in handy.

Another option you may have heard about is MechanicalSoup, a library that works on top of BeautifulSoup and extends it to allow cookie and session handling.

It also gives you a way to populate forms and submit them. However, it does not execute JavaScript, so it will not be as useful as Selenium with Python might be. It could still help you with web scraping if you need to log in to a website before gathering data.

Web scraping on its own may even be a specialist field. However, it doesn’t hurt to have some knowledge for when a nice project comes your way.

So there is a lot for you to learn. Just some final words, if you liked this tutorial and want to read some more of my tutorials you can check out some of them here:

I also create YouTube videos on the main topics of my tutorials. You can check out my YouTube channel here: https://www.youtube.com/channel/UC1i4hf14VYxV14h6MsPX0Yw